- TensorFlow sequential how to

- TensorFlow sequential full

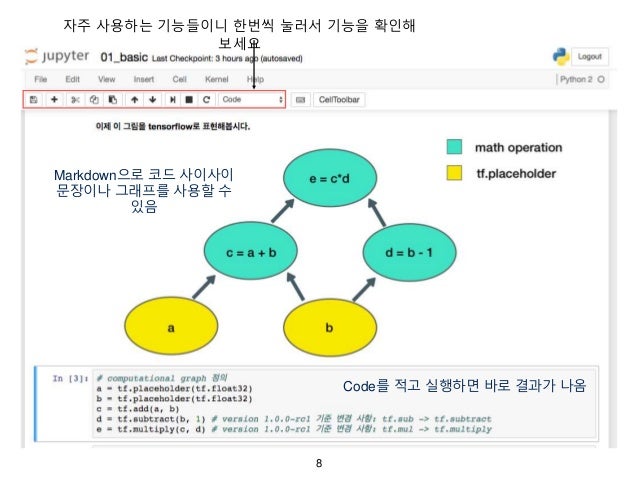

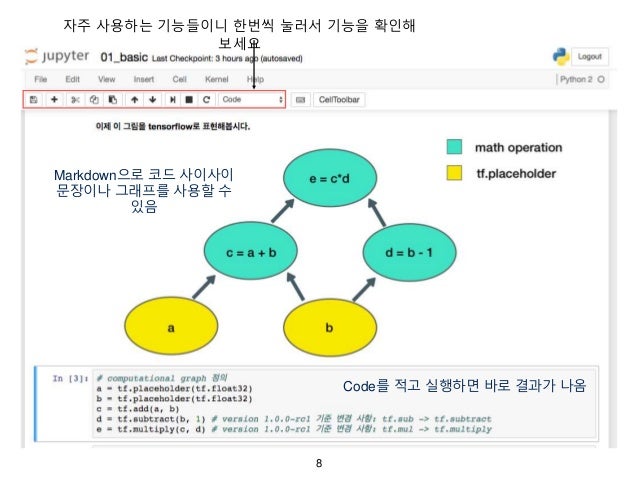

This simple example demonstrates how to plug TensorFlow Datasets (TFDS) into a Keras model.

Start by building an efficient input pipeline, using advice from the Better performance with the tf.data API guide.

Load the MNIST dataset with the following arguments:

- `shuffle_files=True`: The MNIST data is only stored in a single file, but for larger datasets with multiple files on disk, it's good practice to shuffle them when training.
- `as_supervised=True`: Returns a tuple `(img, label)` instead of a dictionary.

```python
import tensorflow as tf
import tensorflow_datasets as tfds

(ds_train, ds_test), ds_info = tfds.load(
    'mnist',
    split=['train', 'test'],
    shuffle_files=True,
    as_supervised=True,
    with_info=True,  # needed because ds_info is unpacked above
)
```

Build the training pipeline by applying the following transformations:

- `tf.data.Dataset.map`: TFDS provides images of type `tf.uint8`, while the model expects `tf.float32`, so the images need to be normalized.
- `tf.data.Dataset.cache`: As the dataset fits in memory, cache it before shuffling for better performance. Note: Random transformations should be applied after caching.
- `tf.data.Dataset.shuffle`: For true randomness, set the shuffle buffer to the full dataset size. Note: For large datasets that can't fit in memory, use `buffer_size=1000` if your system allows it.
- `tf.data.Dataset.batch`: Batch elements of the dataset after shuffling to get unique batches at each epoch.
- `tf.data.Dataset.prefetch`: It is good practice to end the pipeline by prefetching for performance.

```python
def normalize_img(image, label):
    """Normalizes images: `uint8` -> `float32` in [0, 1]."""
    return tf.cast(image, tf.float32) / 255., label

ds_train = ds_train.map(normalize_img, num_parallel_calls=tf.data.AUTOTUNE)
ds_train = ds_train.cache()
# Shuffle buffer = full size of the training split.
ds_train = ds_train.shuffle(ds_info.splits['train'].num_examples)
ds_train = ds_train.batch(128)
ds_train = ds_train.prefetch(tf.data.AUTOTUNE)
```

Your testing pipeline is similar to the training pipeline, with small differences:

- There is no need to call `tf.data.Dataset.shuffle`.
- Caching is done after batching because batches can be the same between epochs.

```python
ds_test = ds_test.map(normalize_img, num_parallel_calls=tf.data.AUTOTUNE)
ds_test = ds_test.batch(128)
ds_test = ds_test.cache()
ds_test = ds_test.prefetch(tf.data.AUTOTUNE)
```

Plug the TFDS input pipeline into a simple Keras model, compile the model, and train it.
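The model step can be sketched as follows. This is a minimal sketch under stated assumptions, not the original author's code. So that it runs without downloading MNIST, it swaps in a small synthetic dataset of random `uint8` images. Each image is shaped 28×28×1, as TFDS MNIST images are with `as_supervised=True`, and each label is an integer from 0 to 9. The dataset sizes, batch size, layer sizes, learning rate and epoch count are illustrative choices. The pipelines follow the map, cache, shuffle, batch, prefetch order described above. The evaluation pipeline skips shuffling and caches after batching.

```python
import numpy as np
import tensorflow as tf

# Assumption: synthetic stand-in for MNIST so the sketch runs offline.
# Shapes/dtypes mimic TFDS MNIST with as_supervised=True: (28, 28, 1) uint8 images.
NUM_TRAIN, NUM_TEST = 512, 128
rng = np.random.default_rng(0)
x_train = rng.integers(0, 256, size=(NUM_TRAIN, 28, 28, 1), dtype=np.uint8)
y_train = rng.integers(0, 10, size=(NUM_TRAIN,), dtype=np.int64)
x_test = rng.integers(0, 256, size=(NUM_TEST, 28, 28, 1), dtype=np.uint8)
y_test = rng.integers(0, 10, size=(NUM_TEST,), dtype=np.int64)


def normalize_img(image, label):
    """Cast uint8 pixels to float32 and scale them to [0, 1]."""
    return tf.cast(image, tf.float32) / 255.0, label


BATCH_SIZE = 64  # illustrative choice

# Training pipeline: map -> cache -> shuffle (full-size buffer) -> batch -> prefetch.
ds_train = (
    tf.data.Dataset.from_tensor_slices((x_train, y_train))
    .map(normalize_img, num_parallel_calls=tf.data.AUTOTUNE)
    .cache()
    .shuffle(NUM_TRAIN)
    .batch(BATCH_SIZE)
    .prefetch(tf.data.AUTOTUNE)
)

# Evaluation pipeline: no shuffle, and cache after batching.
ds_test = (
    tf.data.Dataset.from_tensor_slices((x_test, y_test))
    .map(normalize_img, num_parallel_calls=tf.data.AUTOTUNE)
    .batch(BATCH_SIZE)
    .cache()
    .prefetch(tf.data.AUTOTUNE)
)

# A small Sequential model: flatten the image, one hidden layer, 10 output logits.
model = tf.keras.Sequential([
    tf.keras.Input(shape=(28, 28, 1)),
    tf.keras.layers.Flatten(),
    tf.keras.layers.Dense(128, activation="relu"),
    tf.keras.layers.Dense(10),
])

model.compile(
    optimizer=tf.keras.optimizers.Adam(0.001),
    # The last Dense layer has no softmax, so the loss receives raw logits.
    loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),
    metrics=[tf.keras.metrics.SparseCategoricalAccuracy()],
)

# Train on the shuffled pipeline and report validation metrics on the test pipeline.
history = model.fit(ds_train, epochs=2, validation_data=ds_test, verbose=0)
```

With the real data, `ds_train` and `ds_test` would come from `tfds.load` instead. `SparseCategoricalCrossentropy` is used because the labels are plain integer class ids rather than one-hot vectors. The accuracy on random synthetic data is meaningless. The sketch only shows that the pieces fit together.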
